Computer learns to play games by itself, using only the pixels and the game score as inputs

One step closer to HAL 9000, the famous computer and primary antagonist of “2001: A Space Odyssey”? Last week the journal Nature published a study in which researchers at the Google-owned company DeepMind report on their progress in artificial intelligence.

From the Nature paper:

We demonstrate that the deep Q-network agent, receiving only the pixels and the game score as inputs, was able to surpass the performance of all previous algorithms and achieve a level comparable to that of a professional human games tester across a set of 49 games.

This was done using…

… recent advances in training deep neural networks to develop a novel artificial agent, termed a deep Q-network, that can learn successful policies directly from high-dimensional sensory inputs using end-to-end reinforcement learning. We tested this agent on the challenging domain of classic Atari 2600 games. This work bridges the divide between high-dimensional sensory inputs and actions, resulting in the first artificial agent that is capable of learning to excel at a diverse array of challenging tasks.
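For readers who want the underlying formula: in the paper's notation, the deep Q-network is trained to approximate the optimal action-value function Q*(s, a). This is the highest expected sum of future rewards (changes in the game score), discounted by a factor γ per time step, that can be obtained after observing state s and taking action a. It obeys the Bellman equation, which is what makes learning by trial and error possible: the value of a move equals the reward it yields plus the discounted value of the best move afterwards.

$$
Q^*(s,a) = \max_{\pi} \mathbb{E}\left[ r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \cdots \,\middle|\, s_t = s,\ a_t = a,\ \pi \right]
$$

$$
Q^*(s,a) = \mathbb{E}_{s'}\left[ r + \gamma \max_{a'} Q^*(s',a') \,\middle|\, s, a \right]
$$

The network Q(s, a; θ), with weights θ, stands in for Q*; training adjusts θ so that its predictions move toward the right-hand side of the second equation.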

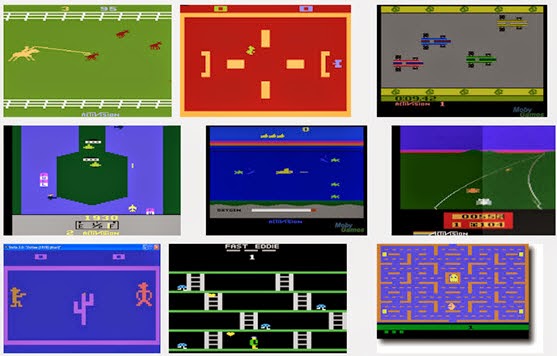

So, according to the paper, DeepMind’s software was tested on 49 Atari games and, across that set, reached a level comparable to that of a professional human games tester. The games include classics for the Atari 2600 console such as “Breakout”, “Video Pinball” and “Space Invaders”. The Atari 2600 was released in 1977 and was popular in the eighties.

Unlike earlier game-playing software such as IBM’s Deep Blue (chess) or Watson (which won the quiz show Jeopardy!), DeepMind’s agent is not pre-programmed with the rules of the game or with specific strategies. Its only inputs are the raw pixels of the screen and the game score, plus plenty of time for trial and error.
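To make “trial and error” concrete, here is a minimal sketch of tabular Q-learning, the classic algorithm that the deep Q-network builds on, applied to a hypothetical toy game: a six-cell corridor with a reward at the right end. This is not DeepMind’s code; the environment, the names `step`, `choose_action`, `train` and `greedy_policy`, and all parameter values are assumptions chosen for illustration. The agent only ever sees a state number (standing in for the pixels) and a reward (standing in for the score).

```python
import random

# Hypothetical toy "game" (an assumption for illustration, not an Atari game):
# a corridor of N_STATES cells. The agent starts in cell 0; reaching the last
# cell ends the episode with reward 1, every other step gives reward 0.
# The agent is never told these rules, it only observes states and rewards.
N_STATES = 6
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Stand-in for the game emulator: return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy: usually exploit the best-known action, sometimes explore."""
    if rng.random() < epsilon or q[state][0] == q[state][1]:
        return rng.choice(ACTIONS)  # explore (or break a tie) at random
    return 0 if q[state][0] > q[state][1] else 1


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: learn q[s][a] purely by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated future score per (state, action)
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Target: observed reward plus the discounted best estimate of what
            # follows (nothing follows once the episode is over).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best action in each non-terminal cell according to the learned table."""
    return [0 if left > right else 1 for left, right in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy should be “move right” (1) in every cell, learned from the reward signal alone. The deep Q-network replaces the lookup table `q` with a convolutional neural network that reads raw screen pixels, which is what lets the same idea scale to Atari games.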

What did they do differently?

First, they used a biologically inspired mechanism termed experience replay that randomizes over the data, thereby removing correlations in the observation sequence and smoothing over changes in the data distribution. Second, they used an iterative update that adjusts the action-values (Q) towards target values that are only periodically updated, thereby reducing correlations with the target.

(I don’t claim to understand this paragraph, but copied it from the Nature paper to show this stuff is complex.)
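The two tricks in the quoted paragraph can be sketched in a few lines. Here, experience replay is a bounded memory of past transitions from which random minibatches are drawn for learning, and the target values are computed from a frozen copy of the Q-values that is refreshed only every `sync_every` steps. This is a hedged illustration under stated assumptions: it uses a hypothetical corridor game and a lookup table instead of DeepMind’s convolutional network on Atari frames, and the names `ReplayBuffer`, `train`, `sync_every` and all parameter values are made up for the example.

```python
import copy
import random
from collections import deque

# Hypothetical toy game (an assumption, not Atari): a corridor of N_STATES
# cells with reward 1 for reaching the last cell, 0 otherwise.
N_STATES = 6
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Stand-in for the game emulator: return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


class ReplayBuffer:
    """Experience replay: a bounded memory of past transitions."""

    def __init__(self, capacity, seed=0):
        self.memory = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def push(self, transition):
        self.memory.append(transition)  # (state, action, reward, next_state, done)

    def sample(self, batch_size):
        # A uniformly random minibatch mixes transitions from different moments,
        # so consecutive (strongly correlated) steps are rarely learned together.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def train(episodes=300, batch_size=8, sync_every=50,
          alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    buffer = ReplayBuffer(capacity=1000, seed=seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # online estimates, updated every step
    target_q = copy.deepcopy(q)                 # frozen copy, used only for targets
    steps = 0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy action choice, random on ties.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            buffer.push((state, action, reward, next_state, done))
            if len(buffer) >= batch_size:
                # Learn from a random minibatch of remembered transitions,
                # not just from the step that was just played.
                for s, a, r, s2, d in buffer.sample(batch_size):
                    target = r if d else r + gamma * max(target_q[s2])
                    q[s][a] += alpha * (target - q[s][a])
            steps += 1
            if steps % sync_every == 0:
                target_q = copy.deepcopy(q)  # periodic target update
            state = next_state
    return q


if __name__ == "__main__":
    q = train()
    print([0 if left > right else 1 for left, right in q[:-1]])
```

The design choice mirrors the quoted paragraph: because `target_q` only changes every `sync_every` steps, each update chases a fixed target for a while instead of one that shifts with every change to `q`.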

To evaluate their DQN agent, they took advantage of the Atari 2600 platform, which offers a diverse array of tasks (n=49). This part, I fully understand.

The next steps? According to DeepMind’s co-founder Demis Hassabis, the team is working to improve the artificial intelligence (AI) of their software so that it can also play computer games from the nineties (the Atari 2600 was an eighties console). A more ambitious long-term goal is mastering driving through three-dimensional worlds, as in the “Grand Theft Auto” games. Hassabis said in the “New York Times” that he considers this goal reachable within the next five years. And if something can control a car in a racing game, “it should theoretically be able to control a car” in the physical world.

Further reading:

- 2015: Human-level control through deep reinforcement learning, Nature (paywall)

- 2013: Playing Atari with Deep Reinforcement Learning (earlier version of the same work, using “only” seven Atari games)

- 1992(!): TD-Gammon, a program that achieved a level of play just slightly below that of the top human backgammon players of the time. It explored strategies that humans had not pursued and led to advances in the theory of correct backgammon play.